Testing Micro Services

I recently had a short exchange with Ole Michaelis on Twitter about how to end-to-end test micro services. Since I didn't have time to make my whole case, Ole suggested that I blog about this, which I'm happily doing now.

The idea behind micro service architecture was discussed by Martin Fowler in a very readable blog post, so I won't go into that topic in detail here.

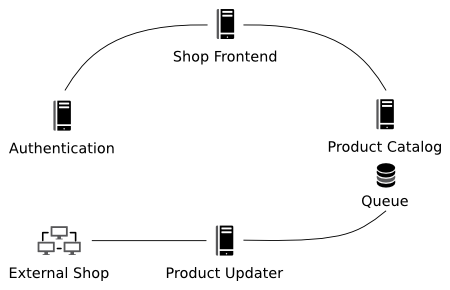

At Qafoo we run a flavor of what would today be called a micro service architecture for Bepado. The sketch shows a simplified part of it. While the frontend is roughly a classical web application, the other components shown are modelled as independently deployable, slim web services. The Product Updater service is responsible for fetching product updates from remote shops. It validates the incoming data and queues the product for indexing. Indexing is then performed in a different service, the Product Catalog.

Extract of bepado architecture

While this shows only a small part of the full architecture, the typical problems with system-testing such environments become evident. Sure, you can unit test classes inside each micro service and apply classical system tests to each service in isolation. But how would you perform end-to-end system tests for the full application stack?

Trying to apply the classical process of "arrange, act, assert" did not work for two reasons: a) there is a good portion of asynchronicity (queuing) between services, which makes it impossible to know at which point in time a certain state of the system can be asserted. b) setting up the fixture for the full system to trigger a certain business behavior in isolation would be quite a complex task.

A fix for a) could be to mock out the queues and use synchronous implementations in the test setup. Still, the accumulated service communication overhead (HTTP) makes timing hard. Again, you could mock out the service layer and replace it with something that avoids API roundtrips for testing. But this would mean that you need to maintain two more implementations of critical infrastructure for testing purposes only, and it would make your system test rather unreliable, because you would have moved a long way from the production setup.
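To make the first option concrete, here is a minimal sketch of what such a synchronous stand-in for a queue might look like. This is not our actual code: the `Queue` interface, the `SynchronousQueue` class and the message layout are all made up for illustration.

```python
from typing import Callable, List, Protocol


class Queue(Protocol):
    """Hypothetical queue interface shared by production and test code."""

    def publish(self, message: dict) -> None: ...


class SynchronousQueue:
    """Test-only double: hands each message to its consumers immediately."""

    def __init__(self) -> None:
        self._consumers: List[Callable[[dict], None]] = []

    def subscribe(self, consumer: Callable[[dict], None]) -> None:
        self._consumers.append(consumer)

    def publish(self, message: dict) -> None:
        # No broker and no delay: every consumer has run before publish() returns.
        for consumer in self._consumers:
            consumer(message)


# A stand-in for the indexing side that just records what it was given.
indexed: List[str] = []
queue = SynchronousQueue()
queue.subscribe(lambda message: indexed.append(message["product_id"]))

queue.publish({"product_id": "p-1"})

# The state can be asserted right away, which a real queue would not allow.
assert indexed == ["p-1"]
```

This is exactly the kind of extra infrastructure that would have to be kept in sync with the real queue, and it hides every timing effect the production system actually has.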

A similar argument applies to point b): Maintaining multiple huge fixtures for different test scenarios through a stack of independent components is a big effort. Micro fixtures for each service easily become inconsistent with each other.

For these reasons, we moved away from the classical testing approach for our end-to-end tests and instead focused on metrics.

The system already collects metrics from each service for production monitoring. These metrics include statistical information like "number of updates received per shop", various failover scenarios, e.g. "an update received from a shop was invalid, but could be corrected to maintain a consistent system state", errors, thrown exceptions and more. For system testing we actually added some more metrics, which turned out to be valuable in production, too.

In order to perform a system test, we install all micro services into a single VM. This allows the developer to easily watch logs and metrics in a central place. A very simple base fixture is deployed that includes some test users. In addition to that, we maintain a few small components that emulate external systems with entry points to our application. These "emulation daemons" feed the system with randomized but production-like data and actions. One example is the shop emulator, which provides product updates (valid and invalid ones) for multiple shops.
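To give an idea of how little such an emulation daemon needs to do, here is a rough sketch of the data generation part of a shop emulator. It is not the real implementation: the function names, the fields of an update and the rule that a non-positive price is invalid are assumptions made for this example.

```python
import random
from typing import Dict, Iterator


def emulate_shop_updates(
    shop_id: str,
    count: int,
    invalid_ratio: float = 0.2,
    seed: int = 0,
) -> Iterator[Dict[str, object]]:
    """Yield randomized product updates, some of them deliberately invalid."""
    rng = random.Random(seed)  # seeded, so a run can be reproduced
    for number in range(count):
        update: Dict[str, object] = {
            "shop": shop_id,
            "product_id": f"{shop_id}-{number}",
            "title": f"Product {number}",
            "price": round(rng.uniform(1.0, 500.0), 2),
        }
        if rng.random() < invalid_ratio:
            # Assumed validation rule: a price must be greater than zero.
            update["price"] = -1.0
        yield update


def is_valid(update: Dict[str, object]) -> bool:
    """Assumed stand-in for the validation done by the receiving service."""
    price = update["price"]
    return isinstance(price, float) and price > 0


if __name__ == "__main__":
    for shop in ("shop-a", "shop-b"):
        for update in emulate_shop_updates(shop, count=3):
            print(update, "valid" if is_valid(update) else "invalid")
```

A real daemon would send these updates to the application through its normal entry points instead of printing them.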

As a developer I can now test whether the whole application stack still works within normal parameters by monitoring this system and checking the plausibility of the metrics. This helps us ensure that larger changes, and especially refactorings across service boundaries, do not break the core business processes of the system.
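We do this check by looking at the metrics, but the idea can be written down in a few lines. The following is only a sketch of what "plausible" might mean in code: the metric names and the bounds are invented for the example and do not come from our system.

```python
from typing import Dict, List, Tuple

Bounds = Tuple[float, float]


def implausible_metrics(
    metrics: Dict[str, float],
    expected: Dict[str, Bounds],
) -> List[str]:
    """Return a description of every metric that is missing or out of bounds."""
    problems: List[str] = []
    for name, (low, high) in expected.items():
        value = metrics.get(name)
        if value is None:
            problems.append(f"{name}: missing")
        elif not low <= value <= high:
            problems.append(f"{name}: {value} outside [{low}, {high}]")
    return problems


# Made-up snapshot of metrics after the emulators have run for a while.
metrics = {"updates_received": 480, "updates_corrected": 35, "exceptions": 2}

# Made-up ranges that a developer would consider normal for such a run.
expected = {
    "updates_received": (100, 1000),
    "updates_corrected": (0, 100),
    "exceptions": (0, 0),
    "products_indexed": (100, 1000),
}

for problem in implausible_metrics(metrics, expected):
    print(problem)
```

In this example the check reports the unexpected exceptions and the fact that no indexing metric was recorded at all, which would hint at a broken connection between two services.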

While this is of course not a completely automated test methodology, it serves us quite well in combination with unit and acceptance tests on the service level: The amount of time spent executing the tests is acceptable, while the effort for maintaining the test infrastructure is low.

What are your experiences with testing micro service architectures? Do you have some interesting approaches to share? Please feel invited to leave a comment!

P.S. I still wonder if it would be possible to raise the degree of automation further, for example by applying statistical outlier detection to the metrics in comparison to earlier runs. But as long as the current process works fine for us, I will most probably not invest much time in research in this direction.
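Just to illustrate what I have in mind, and not something we have built or evaluated: one of the simplest forms of outlier detection is to compare each metric of the current run against the mean and standard deviation of earlier runs. The function name, the threshold of three standard deviations and the sample numbers below are arbitrary assumptions.

```python
from statistics import mean, stdev
from typing import Dict, List


def outliers(
    history: Dict[str, List[float]],
    current: Dict[str, float],
    threshold: float = 3.0,
) -> List[str]:
    """Name the metrics whose current value deviates strongly from earlier runs."""
    flagged: List[str] = []
    for name, value in current.items():
        earlier = history.get(name, [])
        if len(earlier) < 2:
            continue  # not enough earlier runs to estimate a spread
        center = mean(earlier)
        spread = stdev(earlier)
        if spread == 0:
            # The metric never varied before, so any change is suspicious.
            if value != center:
                flagged.append(name)
        elif abs(value - center) / spread > threshold:
            flagged.append(name)
    return flagged


# Invented values from five earlier runs and from the current one.
history = {
    "updates_received": [100, 104, 98, 101, 97],
    "exceptions": [0, 0, 0, 0, 0],
}
current = {"updates_received": 12, "exceptions": 0}

print(outliers(history, current))
```

A check like this assumes that the emulators produce comparable load in every run, which is not a given with randomized data.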